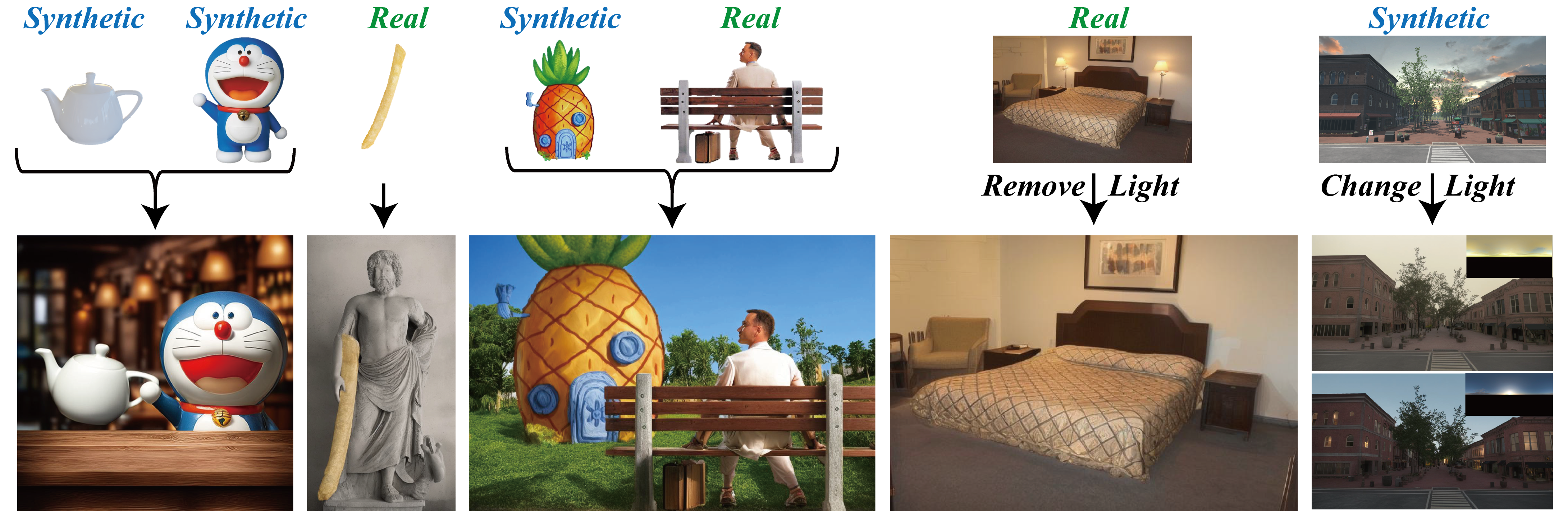

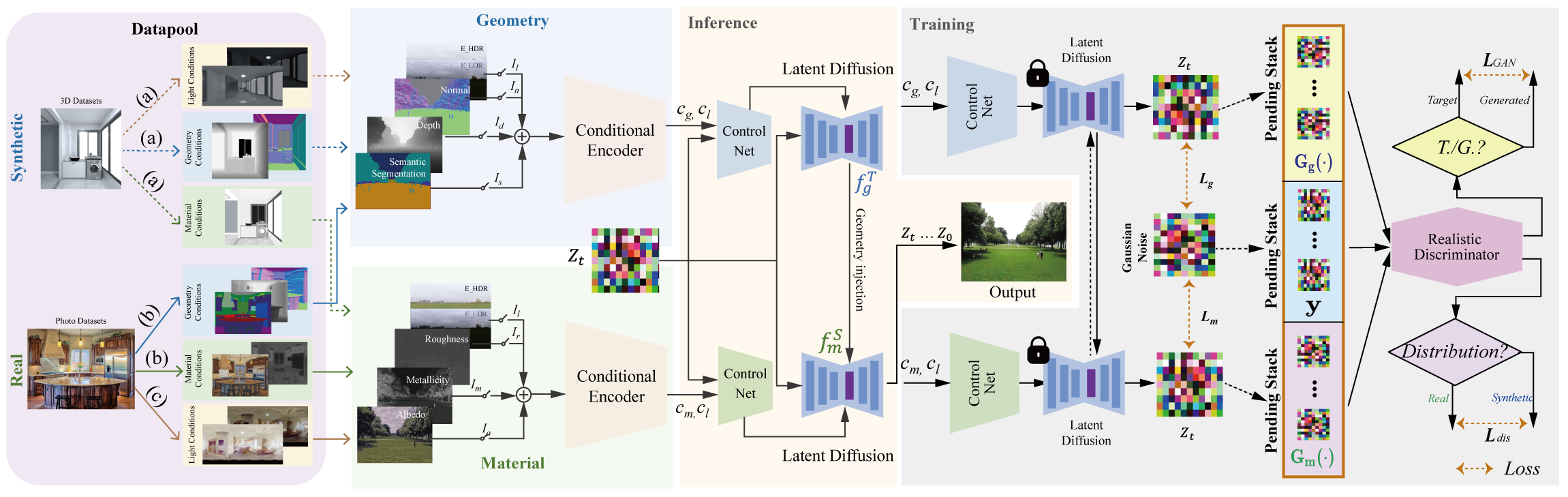

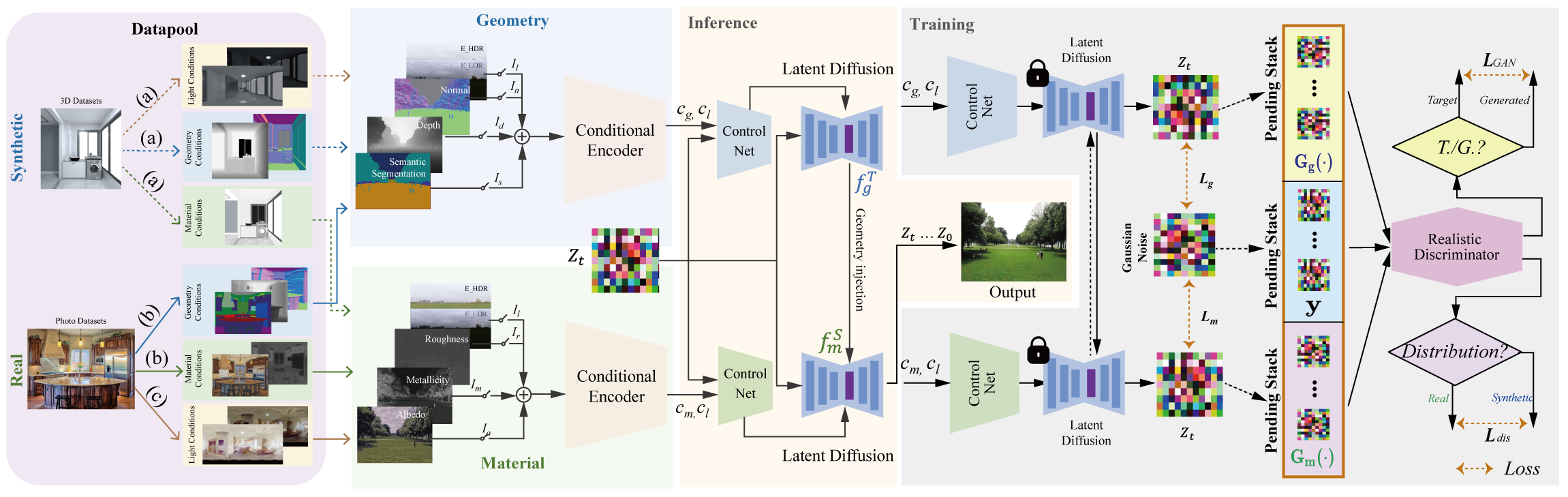

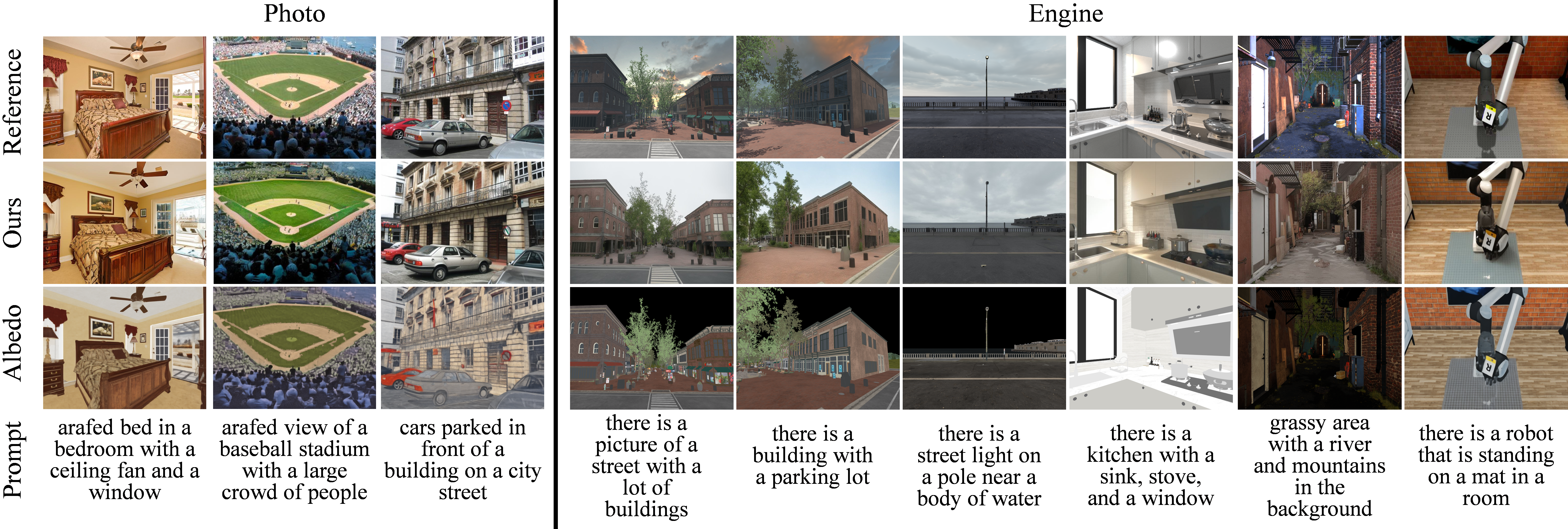

Realistic images are usually produced by rendering engines that simulate light transport in 3D scenes. This pipeline offers precise control over the output but often struggles to produce truly photo-like images. Diffusion models, by contrast, have achieved great success in photorealistic image generation by leveraging priors learned from large datasets of real-world images, but they lack precise, explicit controls. Promisingly, the recently proposed ControlNet enables flexible control of diffusion models without degrading their generation quality. In this work, we introduce IntrinsicControlNet, an intrinsically controllable image generation framework that takes intrinsic images, such as material properties, geometric details, and lighting, as network inputs. This lets users generate photorealistic images from precise, explicit controls, much as they would with a rendering engine. Beyond this, we observe a domain gap between synthetic and real-world datasets, so naively blending them leads to domain confusion. To address this problem, we present a cross-domain control architecture that extracts control information from synthetic datasets and both control and content information from real-world datasets. This bridges the gap between the two domains and enables blending or editing 3D assets and real-world photos for a variety of applications. Experiments and user studies demonstrate that our method generates explicitly controllable and highly photorealistic images from the input intrinsic images.
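As a rough illustration of what "intrinsic images as network inputs" can look like in practice, the sketch below stacks per-pixel intrinsic maps into a single multi-channel conditioning tensor of the kind a ControlNet-style encoder consumes. The channel set (albedo, roughness, metallic, normal, depth, shading), its ordering, and the helper name `stack_intrinsics` are hypothetical choices for illustration only. They are not taken from the IntrinsicControlNet implementation, and the sketch does not model the cross-domain architecture.

```python
import numpy as np

# Hypothetical channel layout (an assumption): the abstract only says that
# material properties, geometric details, and lighting are used as inputs.
INTRINSIC_CHANNELS = {
    "albedo": 3,     # base color (material property)
    "roughness": 1,  # material property
    "metallic": 1,   # material property
    "normal": 3,     # surface normals (geometric detail)
    "depth": 1,      # geometric detail
    "shading": 3,    # lighting / irradiance
}


def stack_intrinsics(maps: dict[str, np.ndarray]) -> np.ndarray:
    """Stack per-pixel intrinsic maps of shape (H, W, C) into one (C_total, H, W) tensor."""
    height, width = next(iter(maps.values())).shape[:2]
    layers = []
    for name, channels in INTRINSIC_CHANNELS.items():
        m = np.asarray(maps[name], dtype=np.float32)
        if m.ndim == 2:
            m = m[..., None]  # promote single-channel 2-D maps to (H, W, 1)
        if m.shape != (height, width, channels):
            raise ValueError(f"{name}: expected {(height, width, channels)}, got {m.shape}")
        layers.append(m)
    control = np.concatenate(layers, axis=-1)  # (H, W, C_total), in INTRINSIC_CHANNELS order
    return np.transpose(control, (2, 0, 1))    # channels-first, as convolutional encoders usually expect


# Usage: dummy 64x64 maps; depth is given as a plain 2-D array of ones.
h, w = 64, 64
maps = {name: np.zeros((h, w, c), dtype=np.float32) for name, c in INTRINSIC_CHANNELS.items()}
maps["depth"] = np.ones((h, w))
control = stack_intrinsics(maps)
print(control.shape)  # (12, 64, 64)
```

Fixing the channel order in one place keeps every training sample's conditioning layout consistent, which matters because the encoder learns a fixed meaning for each input channel.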
